December 12, 2017 / by Robin Scheibler

FFT on the ESP32

I recently started playing with the ESP8266, which was nice but somewhat limited, and have now moved on to its big brother, the ESP32. Given their price and their I2S module, they make very nice platforms for getting started with audio processing and distributed microphone arrays. Just get an SPH0645 I2S microphone and get started! Amazing.

A fixture of audio processing is of course the Short Time Fourier Transform, a kind of time-frequency decomposition that relies on the Fast Fourier Transform. Since I described FFT algorithms for real data almost four years ago, I thought it would be nice to check the performance of the FFT on the ESP32.

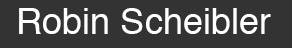

You can find my FFT code on GitHub. I implemented a basic radix-2, a split-radix, and a couple of larger base cases (n=4, n=8). For simplicity and to avoid the costly bit-reversal, I opted for an out-of-place transform. All cosine and sine values are precomputed and stored in a look-up table.
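To make the design concrete, here is a minimal sketch of an out-of-place radix-2 FFT with a precomputed twiddle table. It is written in Python for readability and is not the code from the repository; the names `make_twiddles` and `fft_radix2` are made up for this illustration, and it omits the split-radix variant and the n=4 and n=8 base cases.

```python
import cmath

def make_twiddles(n):
    """Precompute the n/2 twiddle factors exp(-2j*pi*k/n) once, up front."""
    return [cmath.exp(-2j * cmath.pi * k / n) for k in range(n // 2)]

def fft_radix2(x, twiddles, stride=1):
    """Out-of-place recursive radix-2 decimation-in-time FFT (illustrative).

    x        : list of complex samples, length must be a power of two
    twiddles : table built by make_twiddles for the full transform size
    stride   : step into the table (full size divided by current size)
    """
    n = len(x)
    if n == 1:
        return [x[0]]
    # Splitting into even and odd samples reorders the data implicitly,
    # so no separate bit-reversal pass is needed.
    even = fft_radix2(x[0::2], twiddles, stride * 2)
    odd = fft_radix2(x[1::2], twiddles, stride * 2)
    out = [0j] * n
    for k in range(n // 2):
        t = twiddles[k * stride] * odd[k]  # table look-up, no sin/cos call
        out[k] = even[k] + t
        out[k + n // 2] = even[k] - t
    return out

# Usage: transform a unit impulse of length 8
spectrum = fft_radix2([1, 0, 0, 0, 0, 0, 0, 0], make_twiddles(8))
```

The single table is shared by every recursion level: a sub-transform of size n reads every (N/n)-th entry of the table built for the full size N.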

I ran the algorithm a large number of times for different transform sizes and measured the average runtime. The results can be seen below. It turns out that even this vanilla implementation is fairly fast on the ESP32. Even a large size like 4096 only takes a few milliseconds to process.
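The measurement method can be sketched as follows. This is a host-side Python illustration of the averaging idea only, not the benchmark that was run on the board; `average_runtime` and the placeholder workload are hypothetical. On the ESP32 itself one would read a hardware timer instead, for example ESP-IDF's `esp_timer_get_time()`.

```python
import time

def average_runtime(func, arg, repeats=1000):
    """Return the mean wall-clock time of func(arg), in seconds."""
    start = time.perf_counter()
    for _ in range(repeats):
        func(arg)
    return (time.perf_counter() - start) / repeats

def placeholder_workload(samples):
    """Stand-in for the transform under test (assumption for this sketch)."""
    return sum(s * s for s in samples)

# Time the workload for several power-of-two input sizes
results = {}
for size in (64, 256, 1024, 4096):
    results[size] = average_runtime(placeholder_workload, [1.0] * size, repeats=100)
```

Averaging over many repetitions smooths out timer resolution and one-off effects such as cache warm-up.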

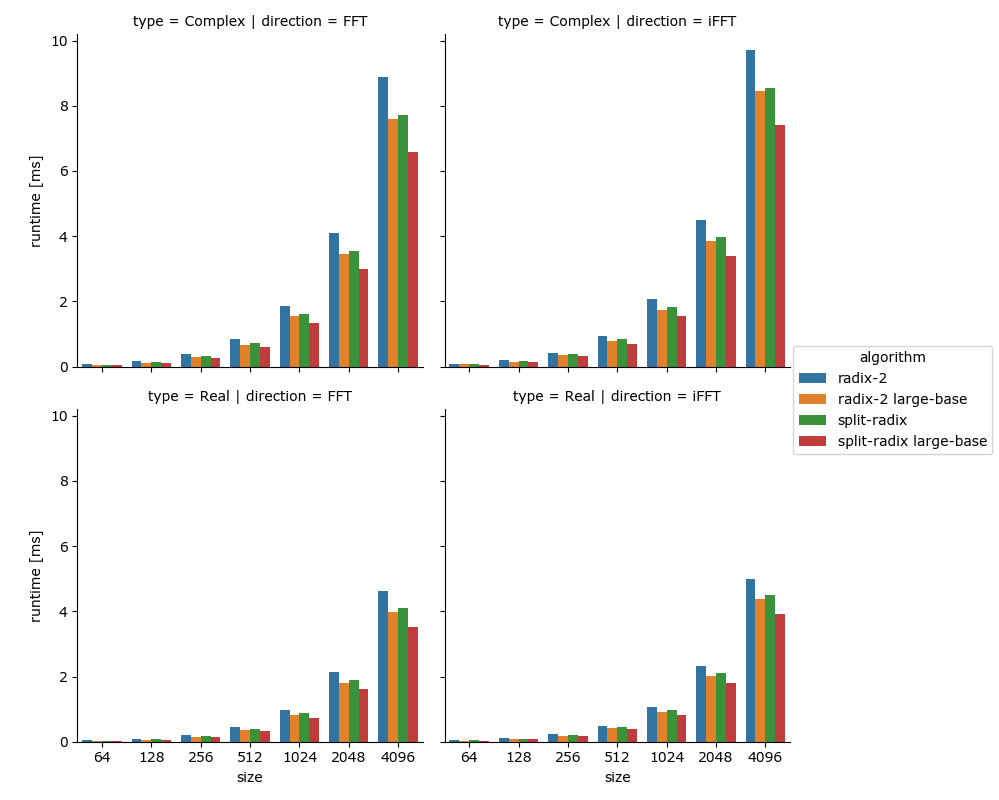

For audio applications, it is interesting to know whether or not real-time processing is doable, and how much of the available time is eaten up by the FFT alone. Here we will assume we do frame processing with a real FFT of twice the frame size. We take the ratio of the cumulative runtime of the FFT and iFFT to the duration of the frame at a given sampling frequency. If this number is larger than 100%, real-time processing is not possible. Luckily, this won’t be the case here.
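The ratio described above is simple arithmetic, sketched here in Python. The function name `fft_load` and the runtimes in the usage example are made up for illustration; they are not measurements from the ESP32.

```python
def fft_load(t_fft_s, t_ifft_s, frame_size, fs_hz):
    """Fraction of a frame's duration spent in the FFT and iFFT.

    t_fft_s, t_ifft_s : runtime of the forward and inverse transform (seconds)
    frame_size        : number of new samples per frame
    fs_hz             : sampling frequency in Hz
    """
    frame_duration_s = frame_size / fs_hz  # time available per frame
    return (t_fft_s + t_ifft_s) / frame_duration_s

# Hypothetical numbers: 1.6 ms per transform, 1024-sample frames at 16 kHz.
# A frame lasts 64 ms, so the two transforms take about 5% of it.
load = fft_load(0.0016, 0.0016, frame_size=1024, fs_hz=16000)
real_time_possible = load < 1.0
```

A value of 1.0 corresponds to the 100% limit: the transforms alone would take as long as the frame itself, leaving no time for anything else.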

We see that up to 44 kHz and a frame size of 2048 samples, we never use more than 16% of the time available, which leaves quite some time to do all kinds of other processing. Thus real-time audio processing is doable on the ESP32. On top of that, the ESP32 is dual core and all the above results were obtained using only one core. It is then perfectly possible to use one core for audio processing and the other for network and control operations.